Xcopy File Path Limit

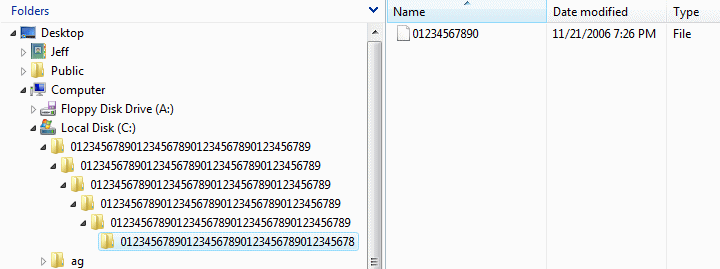

Posted By admin On 20.08.19

This is a continuation of the post started September 01, 2009, with the last post on October 17, 2011. Since this is an ongoing unsolved issue and the thread was locked, it continues here. It is ever so easy to create a long file/path using explorer.exe, as will be shown below. The questions and discussions on the issue of 'File Names are too long, cannot copy, are...' produced no viable, easy solution for non-technical users in that locked thread.

Jul 28, 2011. You can map your way up the directory tree using Subst or Net Use to get around the length limit; I have successfully used that in combination with Robocopy to copy very long paths and file names. Then again, newer versions of Robocopy ARE supposed to handle paths and file names longer than 255 characters.

There was no other similar open thread. One roundabout method of copying a long path/file is given above, but it is cumbersome. Hopefully the discussion and questions will continue, possibly leading to a convenient answer. Would anyone happen to know whether this conundrum will continue into Windows 8?
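The Subst/Net Use trick works because the mapped drive letter stands in for the whole deep prefix, so every path below it becomes that much shorter. The C sketch below only models that string arithmetic; it does not call SUBST or NET USE. It assumes the classic limit of MAX_PATH = 260 characters including the terminating NUL, and `remap_prefix_to_drive` is a hypothetical helper named for illustration.

```c
#include <stdio.h>
#include <string.h>

/* Assumption: classic Win32 MAX_PATH is 260 and includes the terminating
 * NUL, so a usable path holds at most 259 characters. */
#define CLASSIC_MAX_PATH 260

/* Returns 1 if `path` fits under the classic limit, 0 otherwise. */
static int fits_classic_limit(const char *path)
{
    return strlen(path) < CLASSIC_MAX_PATH;
}

/* Hypothetical helper: rewrites `path` as it would appear after the
 * directory `prefix` has been mapped to drive `drive` (for example with
 * SUBST for a local folder or NET USE for a share). Returns 0 on success,
 * -1 if `path` is not under `prefix` or the result does not fit in `out`. */
static int remap_prefix_to_drive(const char *path, const char *prefix,
                                 char drive, char *out, size_t out_size)
{
    size_t plen = strlen(prefix);
    if (strncmp(path, prefix, plen) != 0)
        return -1;
    const char *rest = path + plen;
    /* The prefix must end at a folder boundary: "C:\ab" is not under "C:\a". */
    if (*rest != '\0' && *rest != '\\')
        return -1;
    int n = snprintf(out, out_size, "%c:%s", drive, *rest ? rest : "\\");
    if (n < 0 || (size_t)n >= out_size)
        return -1;
    return 0;
}
```

For example, mapping X: to a folder 217 characters deep turns a 282-character path below it into a 67-character one, which is why the mapped path suddenly works.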


Thanks free404!

Would you mind adding the question to the top of your first post? That way we can try to get an answer to the specific question. Otherwise, would you be up for changing this to a Discussion type instead? Ed Price a.k.a User Ed, Microsoft Experience Program Manager

UNIX, the basis of Linux, was developed 40 years ago! Somehow Linux can get it right but Microsoft cannot. But then again, the volunteer Linux developers work like professionals, while Microsoft is a poorly managed business that fritters away billions of dollars in development costs while producing technology that is retrograde. TO MY POINT, not ONE post related to Windows file name length problems includes a reply describing Microsoft's approach to FIXING the OS file path length problem. That is because Microsoft does NOT focus on creating solutions; they focus on SALES, and any quality that might arise is literally a side effect.

Linux developers focus on quality, and that is why their FREE operating system is still better than Windows 40 years after UNIX was written. I wish that were not true, since I paid for Windows 7, but it is true. :-b

I'm a newbie to this forum, though my group at the company I worked for got our first PC XTs in 1984, and those insane IBM people went and Really Over-did it and put a 10 (repeat: TEN) megabyte hard disk in them. What in the world could anyone do with so much space??? We had DOS (PC DOS?) 1.1 or some such and the DOS program DisplayWrite word processing software on it, and we had to buy the cabling and software in order to convert the data from the 8-inch toaster drives on our IBM Displaywriter word processor over to the XTs. And, yes, I'm older than dirt. <g>

Since that momentous time, I've been associated with a number of so-called PC/x86 variants. Plus, I've said things about Microsoft, too many times to count, that would make a hardened sailor blush. With that said, Jim, AKA 'Why use one word where two will do', from some 2011 posting, gave about the best and most rational explanation and concise question: 'WHY HAS MICROSOFT ALLOWED US TO CREATE PATHNAMES + FILENAMES OF SUCH A LENGTH THAT EVEN THE SAME SOFTWARE CANNOT COPY THEM?????' The man had a good question.

I understand your asking free404 to add their question to the top of the first post, but, uh, er, um, don't you work for said company? Now, I understand that more often than not, a company will listen to a potential customer sooner than to someone sitting next to them. Crazy, but I've seen it many, many times. And I believe Scott Adams covers such things pretty well in Dilbert. But, back to the point. I'll pose my own version of the question: WHY HASN'T MICROSOFT COME UP WITH A FIX/UPGRADE TO IMMEDIATELY CORRECT THIS PROBLEM?

Sure, it's easy for me to pose such a question, and perhaps not so easy for a programmer to sit down for 10 minutes and write the code to make this fix, but I can still ask. I get numerous updates from Microsoft all the time, and I assume those are designed to do something useful. Yes, they give an explanation of what each file is supposed to do, but I'm sure there is more to it than 'This fix helps Windows 7 correct a significant issue with the system inertial dampers.' <smile> Anyway, just for academic reasons if nothing else, what is the path from yourself, maybe even including letters like this one, through the MS bureaucracy to a programmer who is actually assigned to write a fix with the intent of sending it out with other updates? I have a feeling there are quite a few steps between here and there, and that the vast majority of ideas don't get past the first reviewer and die on the vine. But that's just one man's opinion.

Have a good week, Randy. PS: If you have a way to send my message to Jim, AKA 'Why use one word where two will do', please do that for me. He may have set up an ID just for that one posting and not use it all the time, and I understand such things. Thanks, TRW.

I don't know if you need this, but you could use attrib, xcopy and dir from a batch file or just at the command prompt (use `attrib /?` for more info on the switches). From the root folder, e.g. `C:\>attrib +a /s`, turn the archive attribute on. Then run `C:\>xcopy source destination /m`: with /m, xcopy copies only files that have the archive attribute set and turns the attribute off after each file has been copied. Then `C:\>dir /s /a:a > filesnotcopied.txt` will produce a text file listing all the files that were not xcopied, since those are the ones that still have the archive attribute set.
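Why the archive attribute works as a 'not yet copied' marker can be modelled in a few lines. This C sketch is a hypothetical in-memory simulation: the `file_entry` struct and the three functions are invented for illustration and touch no real files. It assumes, as described above, that xcopy /m clears the attribute only on files it actually copied, so whatever still carries the attribute afterwards is what failed.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical in-memory model of the attrib / xcopy /m / dir workflow. */
struct file_entry {
    const char *path;
    bool archive;      /* the archive attribute */
    bool copy_fails;   /* pretend the copy fails, e.g. path too long */
};

/* Step 1, like `attrib +a /s`: set the archive attribute on every file. */
static void set_archive_all(struct file_entry *files, size_t n)
{
    for (size_t i = 0; i < n; i++)
        files[i].archive = true;
}

/* Step 2, like `xcopy source destination /m`: copy only files whose
 * archive attribute is set, clearing it on each file that was copied.
 * Returns the number of files copied. */
static size_t xcopy_m(struct file_entry *files, size_t n)
{
    size_t copied = 0;
    for (size_t i = 0; i < n; i++) {
        if (files[i].archive && !files[i].copy_fails) {
            files[i].archive = false;
            copied++;
        }
    }
    return copied;
}

/* Step 3, like `dir /s /a:a`: files that were NOT copied still have the
 * archive attribute. Stores up to `max` of their paths in `out` and
 * returns the total number found. */
static size_t list_not_copied(const struct file_entry *files, size_t n,
                              const char **out, size_t max)
{
    size_t found = 0;
    for (size_t i = 0; i < n; i++) {
        if (files[i].archive) {
            if (found < max)
                out[found] = files[i].path;
            found++;
        }
    }
    return found;
}
```

The design point is that the attribute carries state between separate commands, so no extra bookkeeping file is needed to know what was skipped.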

I guess it would be worth testing xcopy first to see whether it craps out like copy does.

ROBOCOPY copies long paths, but it's a command-line tool. I found a low-tech solution for locating the files with long paths (this was on a Windows 2008 server).

I realize this is only practical for searching folders with a relatively small number of offending paths. In DOS, CD to the folder you want to search. Do a directory listing with the /s switch.

I redirected it to a text file, for example `dir /s > list.txt`. The DOS window will then display only the file paths that are too long. Use the DOS window's 'Select All' and 'Copy' commands to paste the results into another text file.
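If you already have a plain list of full paths (however it was produced), picking out the offenders and seeing how many characters each one needs to lose is simple string arithmetic. A minimal C sketch, assuming the classic 260-character MAX_PATH that includes the terminating NUL; `path_excess` and `find_too_long` are hypothetical names.

```c
#include <stddef.h>
#include <string.h>

/* Assumption: classic MAX_PATH of 260 including the terminating NUL,
 * so the longest usable path is 259 characters. */
#define CLASSIC_MAX_PATH 260

/* Number of characters that must be removed from `path` before it fits;
 * 0 if it already fits. */
static size_t path_excess(const char *path)
{
    size_t len = strlen(path);
    return len < CLASSIC_MAX_PATH ? 0 : len - (CLASSIC_MAX_PATH - 1);
}

/* Stores up to `max` offending paths from `paths` in `out` and returns
 * the total number of offending paths found. */
static size_t find_too_long(const char *const *paths, size_t n,
                            const char **out, size_t max)
{
    size_t found = 0;
    for (size_t i = 0; i < n; i++) {
        if (path_excess(paths[i]) > 0) {
            if (found < max)
                out[found] = paths[i];
            found++;
        }
    }
    return found;
}
```

Knowing the excess tells you how many letters to delete when renaming, for example a 265-character path has to lose 6.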

This is not a 'solution' but a 'work around', a low-tech fix for the error message 'your file name is too long to be copied, deleted, renamed, moved':

1. The problem is this: the 'file name' has a limit on the number of characters, and that count really includes the entire path name, so you have to shorten it first (i.e., if the total number of characters in the file name plus the path name is over the limit, the error appears). The deeper your folder sub-levels are, the more this problem will come up, especially when you copy to a subfolder of a subfolder of another path (each extra level adds to the character count).

2. How do you know which combined file names + path names are too long if you are in the middle of a copy operation and this error pops up? Some files have been copied, but the 'long file name' error message only offers 'Skip' or 'Cancel'. It does not say which files are the 'too long' ones.

3. If you hit 'Skip' or 'Cancel', the 'too long' files are left behind, but they are mixed in with the non-offending 'good', 'short name' files. Sorting through thousands of 'good' files to find a few 'bad' ones manually is impractical. Here's how you sort out the 'bad' from the 'good':

4. Let's say you want to copy a folder, 'Football', that has five layers of subfolders, each containing numerous files: C:\1 football\2 teams\3 players\4 stats\5 injuriessidelineplayerstoolong. There are five levels: the root '1 football', with subfolders 2, 3, 4 and lastly '5 injuries'.

5. Use 'Cut' and 'Paste' (for example, to back up all five levels to a new backup folder): select '1 football', Cut, select the target folder, Paste. ('Cut' means that as each file is copied to the target destination, it is deleted from the source location.) Hint: avoid pasting into a target folder that is itself a sub/sub/sub folder; that compounds the 'characters over the limit' problem, because the characters in the target folder's path are included in the limit. Instead, paste to the C:\ root directory. Suppose an error pops up in the middle of this operation: '5 files have file names that are too long. Skip or Cancel?' Select 'Skip' and let the operation finish.

6. Now go back and look at the source location. Only the 'good', 'short name' files were copied, and the 'Cut' deleted them from the source. Because you skipped the 'bad', 'long name' files, they were neither copied nor deleted, so they stick out like a sore thumb.

7. You will find that all that remains in the source folders are the 'bad', 'too long' files; in this example the 'bad' file is in level 5: C:\1 football\2 teams\3 players\4 stats\5 injuriessidelineplayerstoolong

8. Select the folder '5 injuriessidelineplayerstoolong' (that's right: select the folder, not the file). You have to rename the folder first.

9. Press F2 to rename the folder. With the folder name highlighted, delete some of the letters; for example, delete 'toolong' so that the name becomes '5 injuriessidelineplayers'.

10. Then go into folder 5 and do the same with the too-long file name: press F2 and, with the file name highlighted, delete some of the letters, e.g. delete 'toolong' so that it becomes 'injuriessidelineplayers'.

11. Cut and paste the renamed file to the target backup folder. The error message will pop up again if you missed any 'bad' files (for example, '5 files too long'); repeat the process for each of them (five times, in this example) until you have fixed all of them.

12. Finally, copy the target destination files back to the source location (once you are certain all the source folders are empty).

Of course, this 'makeshift' solution would not be necessary if MSFT would fix the problem.

This next method doesn't require any extra software, and you don't have to rename anything:

1. In the root of the location that you want to copy your files to, create a folder with a single-character name. I called my folder '1'.

2. Copy the files into there.

3. Drag the files from the newly created folder in the root to the location where you want them.

Note: this works because step 3 only moves the files; it doesn't copy them.

Note: This doesn't have to be the root of the server, just an area as high up the directory tree as possible.
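Both workarounds rely on the same arithmetic: the relative path below the folder being copied does not change, so the only part you control is the length of the destination folder. A minimal C sketch of that check, assuming the classic 260-character limit including the NUL and backslash separators; `joined_length` and `fits_after_copy` are hypothetical helpers.

```c
#include <stddef.h>
#include <string.h>

#define CLASSIC_MAX_PATH 260  /* assumption: includes the terminating NUL */

/* Length of dest_dir + separator + rel_path, where no separator is added
 * if dest_dir already ends in a backslash (as a root like C:\ does). */
static size_t joined_length(const char *dest_dir, const char *rel_path)
{
    size_t d = strlen(dest_dir);
    int need_sep = d > 0 && dest_dir[d - 1] != '\\';
    return d + (need_sep ? 1 : 0) + strlen(rel_path);
}

/* 1 if copying rel_path into dest_dir yields a path under the limit. */
static int fits_after_copy(const char *dest_dir, const char *rel_path)
{
    return joined_length(dest_dir, rel_path) < CLASSIC_MAX_PATH;
}
```

With a 250-character relative path, a destination of C:\1 gives 255 characters and fits, while a destination such as C:\Users\Foo\Desktop\old profile (32 characters) gives 283 and does not.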

The registry is actually fairly basic; it's just also huge and pretty poorly documented. Yes, you can screw up your computer pretty good if you do the wrong thing, just like messing with stuff in the system directory (or, in ancient history, the DOS directory), but that's why you should be careful, have a backup, and not play around or randomly experiment. Anyone who feels comfortable changing a simple registry entry is almost guaranteed to be able to do this without issue.

Anyone who isn't probably doesn't even know what this change does in the first place.

If you have a problem with the registry, how do you cope with the file system and all its folders?

The problem with the registry isn't that it is hard to navigate a hierarchical database, but the way it is being used, apparently to deliberately obfuscate how applications are configured. As a result, it has become an obscenely hideous structure. Compare this to the traditional UNIX style of configuration: simple text files requiring just a text editor and a manual telling you how to do it (another thing that is very often absent in Windows).

And even if the manual doesn't exist, you can often make re.

The registry is supposed to enforce a common method of storing settings across all Windows apps. Of course, that laudable idea has then been tested by twenty-odd years of pretty much everyone writing software for Windows in their own special way, with maybe about 10% bothering to follow Microsoft's standards. And of course, even different departments of Microsoft have their own ideas of how things should be done, so Office does things differently to SQL Server and so on. TL;DR the idea behind the registry wa.

There's undoubtedly a lot of code out there that has `#define MAX_PATH 260` and `wchar_t buffer[MAX_PATH];`. And by 'legacy crap', you presumably mean 'every version of Windows, ever'.

Because this has been the rule for Windows unless you did some funky tricks with your paths ('\\?\D:\very long path'), which give you paths up to about 32K characters in length.
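The 'funky trick' is the extended-length prefix: an absolute path such as D:\very long path is written as \\?\D:\very long path, and a UNC path \\server\share\dir as \\?\UNC\server\share\dir. The C sketch below only performs that string rewrite (the function name is hypothetical). It assumes the input is already a full path using backslashes, because paths with this prefix are handed to the file system without normalization, so '.', '..' and forward slashes are not resolved and relative paths cannot use it.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: converts an absolute Windows path to the
 * extended-length form, e.g.
 *   D:\very long path      ->  \\?\D:\very long path
 *   \\server\share\dir     ->  \\?\UNC\server\share\dir
 * Paths that already carry the prefix are copied unchanged.
 * Returns 0 on success, -1 if the path is not absolute or `out` is too small. */
static int to_extended_length(const char *path, char *out, size_t out_size)
{
    int n;
    if (strncmp(path, "\\\\?\\", 4) == 0) {
        n = snprintf(out, out_size, "%s", path);          /* already prefixed */
    } else if (strncmp(path, "\\\\", 2) == 0) {
        n = snprintf(out, out_size, "\\\\?\\UNC\\%s", path + 2);  /* UNC path */
    } else if (((path[0] >= 'A' && path[0] <= 'Z') ||
                (path[0] >= 'a' && path[0] <= 'z')) &&
               path[1] == ':' && path[2] == '\\') {
        n = snprintf(out, out_size, "\\\\?\\%s", path);   /* drive-letter path */
    } else {
        return -1;  /* relative or otherwise unsupported path */
    }
    if (n < 0 || (size_t)n >= out_size)
        return -1;
    return 0;
}
```

Only the string is produced here; on Windows it would then be passed to a wide-character (W) file API such as CreateFileW, which is where the longer limit applies.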

It's about time they did away with that limit. It's sort of rare, but you can occasionally run into path limits, especially with deeply nested computer-generated filenames, etc.

So, no, this shouldn't cause an issue unless a developer is stupid enough to put the required manifest information in without actually ensuring the code can handle the longer paths/filenames.

Even if Windows hides paths longer than 260 characters from legacy apps, what exactly will Windows return for a call to GetCurrentDirectory when a legacy app runs from a path longer than that? What happens when the user tries to explicitly load or save a file from such a path (as in, pastes the too-long path directly into the file dialog, which then tries to stuff it into a variable defined as 260 characters long)?

I can't see any way for this not to break a ton of legacy apps, in potentially dangerous ways, regardless of whether MS checks their manifest.

For some reason I keep on running into path limits with Workplace Health and Safety people, who like to set policies of incredibly long directory names and filenames with punctuation in them, plus very deep nesting that repeats part of the name of the directory above. Having a *nix file server, I can rename things for them when they fuck up.

'There's undoubtedly a lot of code out there that has `#define MAX_PATH 260` and `wchar_t buffer[MAX_PATH];`.' Undoubtedly, because that's how it is defined in the Windows.h header file for both VS and the GCC offshoots. Microsoft has known for years that this was a problem, but they also acknowledged the pain that a change like this would cause for thousands of developers, and even more so for the poor bastards like me who have to support end-of-life software where we can't simply make a change and then recompile the source code to fix this problem. I kind of wanted them to leave this one alone, but I guess they are d.

Why not just turn this on by default? If this breaks some kind of DOS convention, then it's likely only relevant to enterprise users running some legacy crap, and assuming they run Windows 10 at all, I highly doubt they're going to upgrade to this build any time soon anyway.

Because it was not traditionally a registry setting but a compile-time setting. Software has to be updated to use the new capabilities, and most software probably won't be, as the majority of software affected by this issue will by now have been upgraded to use the Win32 Unicode (wide-string) APIs, which with the \\?\ prefix allow paths of roughly 32K characters instead of the 260-character limit of the Win32 ANSI APIs (CreateFileW vs CreateFileA). And that's also assuming that a simple re-compile will do the trick; unfortunately most.

At work it happens all the bloody time. We have a very large file share, around 10 TB, of files generated when we do projects for our clients.

Frequently our account execs will try to organize one of our larger client folders and end up nesting files and folders so deeply that the data becomes inaccessible. It's pretty easy to do when many documents are generated by Mac users, who give zero fucks about file and folder name length. Also, I will bet that if you fire up PowerShell and do a 'get-childitem.

It's quite a common issue on very large projects where there might be a file repository on a network file system instead of a document management system. You'll quite often see insanely deep directory structures meant to keep things organized and let people find exactly what they are looking for (which seldom works, because there's always a bunch of files that refuse to be pigeonholed like that).

Generally not a problem on network servers, but when you try and copy a chunk of the directory tree over. I've seen paths that tried to be longer than 260 characters with archives off of Usenet. Some idiot will use the filename as a text message. When it gets extracted the path becomes somestupidlylong200characterfilename/somestupidlylong200characterfilename.ext.

Since the path then becomes too long, extraction fails. The above isn't really a legitimate filename; it's being abused. But for a legitimate example, a common way to organize an HTPC movie collection is the format Movies\FirstLetter\Title\Title.mkv. So Finding Nemo, for instance, would be Movies\F\Finding Nemo\Finding Nemo.mkv.

If you have a very long movie title (see imdb.com), then you would legitimately have a path that is too long if you used the full movie name.

There are easier ways. Use MKLINK to create a symbolic link deeper into the path so that Explorer can work with a shorter path. If it is a network share, use NET USE to map a drive letter deep into the share path. Use SUBST to do the same for local file systems, that is, to mount a folder deep in the file path as a logical drive. From a command line (cmd.exe) you can address long file paths with '\\?\<drive letter>:\<file or directory path>'. Most commands work, but some, like RENAME, will have trouble because they interpret the '?' as a wildcard.

Because, as stated in another thread, MS/W devs do `char path[MAX_PATH];`, so if MS removes the limit most programs will stack overflow.

You may mean buffer overflow here. The declaration you've quoted would be resolved at compile time, not run time.

So if MAX_PATH was 260 at compile time and the program then ran on a system whose run-time behavior allowed longer paths, I could certainly see a buffer overflow happening. But the program will only ever allocate 260 bytes off the stack in that case, so stack usage would remain the same. And I assume that if MAX_PATH were UI64_MAX (or whatever) at compile time, the compiler would complain.

They might stack overflow; depending on how they are written, they also might do other things.

I bet there is a fair amount of `strncpy(foo, bar, MAX_PATH);` out there as well. That could lead to strings that are not null-terminated but also don't overwrite anything, the result probably being some kind of crash when foo is read. The other possibility is truncation: only the first 260 bytes of the path get copied, and some later action is taken on the partial path. Maybe that fails, maybe that results in a n.

I misread that as MSJW devs, and now I'm really hoping that doesn't become a thing. Their code would never run successfully:
- They'd consider it every program's right to call `abort()` without being criticized.

They could only use peer-to-peer architectures, as master/slave is oppressive. All branching logic would initially be floating-point-based, as Boolean logic is exclusionary and thus a micro-aggression against LGBTQ culture. However, it would later be decided that forcing branching logic was inherently judgmental, and thus all program instructions must have an equal chance to execute every processor clock tick. The one upside is that their code would be meticulously designed to avoid race conditions.

Sadly, it would also be subject to nearly constant deadlock.

This isn't just something you can switch on without thought. Windows' native programming has long had a MAX_PATH constant, which devs would use to create a `char[MAX_PATH]` buffer to accept user input (e.g. from a save-file dialog). If you suddenly start creating paths longer than this, you risk buffer overflows. Even if your app is carefully written to avoid buffer overflows in this situation, it may simply refuse to read a file whose path is too long. Devs have been able to go beyond MAX_PATH for a while by using extended-length (\\?\) paths, but almost nobody does, because you'll find random apps that don't know how to handle a longer path.
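The difference between overflowing, truncating and refusing is easy to see with a fixed buffer. In this minimal C sketch, `MAX_PATH_CLASSIC`, `legacy_state` and `store_path` are hypothetical stand-ins, not the windef.h definition. The point is that the array size is fixed when the program is compiled, so a careful program has to check each run-time path against it and refuse the ones that do not fit, which is the 'refuse to read the file' behaviour mentioned above.

```c
#include <string.h>

#define MAX_PATH_CLASSIC 260   /* hypothetical stand-in for MAX_PATH */

/* The array size is baked in at compile time: it is 260 bytes no matter
 * how long the paths handed over at run time turn out to be. */
struct legacy_state {
    char path[MAX_PATH_CLASSIC];
};

/* Copies `src` into the fixed buffer only if it fits, including the NUL.
 * Returns 0 on success, -1 if `src` is too long. On failure the buffer is
 * left as an empty string instead of being overrun or silently truncated. */
static int store_path(struct legacy_state *st, const char *src)
{
    size_t len = strlen(src);
    if (len >= sizeof st->path) {
        st->path[0] = '\0';
        return -1;
    }
    memcpy(st->path, src, len + 1);
    return 0;
}
```

Checking against `sizeof st->path` rather than repeating the constant keeps the test tied to the actual declaration.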


I find it a bit weird that they haven't taken an approach similar to high DPI, where you can embed a manifest resource into your app that tells the OS it supports high DPI. While this would not solve random apps refusing to work with longer paths, it would at least prevent buffer overflows.

It appears Microsoft assumes that only shitty programmers write code for Windows. MAX_PATH is a compile-time constant (a #define in windef.h). Even if you declare a `char mypath[MAX_PATH]` variable (surely you mean `char mypath[MAX_PATH+1]`, right?), you wouldn't just pass it into some other function expecting exactly MAX_PATH characters to be written into it, right? Surely you'll also pass in MAX_PATH as the number of chars you are expecting to get, right? Something like strncpy( mypath, otherpath, MAXPA.
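The strncpy pitfall is worth spelling out: when the source is at least as long as the count, strncpy writes exactly that many bytes and adds no terminating NUL. The C sketch below demonstrates that next to `copy_path_checked`, a hypothetical helper (not a standard function) that always terminates the buffer and reports truncation, so the caller can refuse to act on a partial path.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Returns true if strncpy(dst, src, n) left dst without a terminating NUL,
 * which happens whenever strlen(src) >= n. */
static bool strncpy_left_unterminated(char *dst, const char *src, size_t n)
{
    strncpy(dst, src, n);
    return memchr(dst, '\0', n) == NULL;
}

/* Hypothetical bounded copy: always NUL-terminates dst (size must be > 0)
 * and returns true only if the whole of src fit, so a truncated path can
 * be rejected instead of being used. */
static bool copy_path_checked(char *dst, size_t size, const char *src)
{
    size_t len = strlen(src);
    if (len < size) {
        memcpy(dst, src, len + 1);
        return true;
    }
    memcpy(dst, src, size - 1);   /* keep what fits ...             */
    dst[size - 1] = '\0';         /* ... but always terminate it    */
    return false;                 /* and tell the caller it was cut */
}
```

Passing `sizeof` of the destination buffer as the size, rather than a separately maintained constant, keeps the count and the declaration from drifting apart.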

Not file names - file PATHs longer than 260 characters. As in: 'C:\Users\Fubar\Pictures\Vacation 2013\Hawaii\Dole Plantation\Silly Photo with Sister 023.jpg'. Obviously, that's under 260 characters, but if you try copying an entire user profile to another computer's desktop folder, 'C:\Users\Foo\Desktop\old profile', you get an even longer path. And some people have very elaborate Documents folders for work and school projects that are many nested folders deep, with lots of characters for descriptions. I've hit the character limit more than once myself, especially with MP3 files with full band and song titles in the name and a few project files, and I've hit it multiple times copying entire profiles to servers as backups before swapping out a machine.

This was quite a long time ago (first half of 2008), so my information is out of date. It does seem like the sort of thing that would have been fixed, though.

I was assessing version control systems for an internal project. Git was not ready to work properly on Windows and was hard for normal users, Hg had that bug, and Subversion was too slow (a 12-minute checkout time for the trees concerned). Bazaar came out on top. It worked properly on Windows, was 3x faster than SVN on Windows (and moved up to being more like 6.

I was at a company which developed a large CRM application, and I was the person who tarred up software updates to send to sites. A small part of the application was in Java, and the Java programmers were enamoured with class names which emphasized descriptiveness over brevity. We ended up with some files where path+filename exceeded 255 characters, and tar broke. My fix was to tell the programmers to shorten their damn file and directory names.

(This was about 15 years ago, and it would have been GNU tar.)

Back in the day, when most engineering applications ran on Unix machines and were being migrated to Windows workstations, the path name limitation was a big issue. PTC (Parametric Technology Corp., a vendor of CAD/CAM software) would typically use a MAXPATHLEN of about 10*BUFSIZ, but users could configure it to be bigger if they needed to. And its parts library used very long hashes for file names.

Everything from part name, author name, version number and creation date gets munged into the file names: bearinghousingdjt4v.prt or something like that. They found the 8.3 file name format very confining, so they did a simple hack.
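The string-processing step of that hack can be sketched in C. This is a hypothetical reconstruction, not PTC's code: it assumes the separator inserted after every 8th character is a backslash (the description of creating subdirectories implies one) and ignores the extension, so each resulting component fits the 8-character base of an 8.3 name. Creating the intermediate directories is left out.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: copies `name` into `out`, inserting a backslash
 * after every 8th character so that each component (every directory level
 * and the final name) is at most 8 characters long.
 * Returns 0 on success, -1 if `out` (of out_size bytes) is too small. */
static int split_every_8(const char *name, char *out, size_t out_size)
{
    size_t len = strlen(name), j = 0;
    for (size_t i = 0; i < len; i++) {
        if (i > 0 && i % 8 == 0) {
            if (j + 1 >= out_size)   /* keep room for the final NUL */
                return -1;
            out[j++] = '\\';         /* start a new directory level */
        }
        if (j + 1 >= out_size)
            return -1;
        out[j++] = name[i];
    }
    if (j >= out_size)
        return -1;
    out[j] = '\0';
    return 0;
}
```

For example, bearinghousingdjt4v becomes bearingh\ousingdj\t4v: two directory levels and a short final name.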

They would construct the file/path name just as they would in Unix, then send it through a string processor that inserted a '\' after every 8th character and kept creating subdirectories to get the file name they wanted! Users would see humongous file names and path names. Our company now supports 4K path names; I remember setting MAXPATHLEN to 1025 (remember to allocate space for the trailing null) back when I joined the company decades ago.

I want to be excited about Windows 10 but I can't. Please, please, please give me an official option to turn off telemetry like the Enterprise version has.


I'm also interested in the Long-term Servicing Branch. Thanks, A home user and family IT guy. Are you new at this? Just curious, because Microsoft hasn't been listening to their user base in years. Oh, and the Enterprise version will be fixed soon with the next round of involuntary updates, so don't worry about being left out from those telemetry 'features'. This doesn't work on Home or Pro editions. It's equivalent to setting feedback/diagnostics to 'Basic' which still enables a minimal amount of telemetry.

(Not that I don't think people who have a problem with small amounts of software telemetry are being ridiculous, as it's almost guaranteed that many other devices and software they use also have telemetry features, e.g. video game consoles, phones, cars, etc.)

It appears that in some industries, workplace health and safety documentation policies are exactly that kind of stupid. Why, I have no idea, but so many tedious artificial emergencies have cropped up from people losing access to their stuff due to insanely deep nesting with even more insanely long filenames.

The really hilarious (after the things have been recovered) side of it is being able to write stuff with a long path but not being able to read it back later. 'I've got a meeting in five minutes and I've cop.